Robustification of deep net classifiers by key based diversified aggregation with pre-filtering

Code: PyTorch

If you have questions about our PyTorch code, please contact us.

The research was supported by the SNF project No. 200021_182063.

Abstract

In this paper, we address the vulnerability of machine learning systems to adversarial attacks. We propose and investigate a Key based Diversified Aggregation (KDA) mechanism as a defense strategy. The KDA assumes that the attacker (i) knows the architecture of the classifier and the defense strategy used, (ii) has access to the training data set, but (iii) does not know the secret key. The robustness of the system is achieved by a specially designed key based randomization, which prevents back propagation of the gradients and the creation of a "bypass" system. The randomization is performed simultaneously in several channels, and a multi-channel aggregation stabilizes the results of the randomization by aggregating the soft outputs of the classifiers in the multi-channel system. The experimental evaluation demonstrates the high robustness and universality of the KDA against the most efficient gradient based attacks, such as those proposed by N. Carlini and D. Wagner, and against non-gradient based sparse adversarial perturbations such as OnePixel attacks.

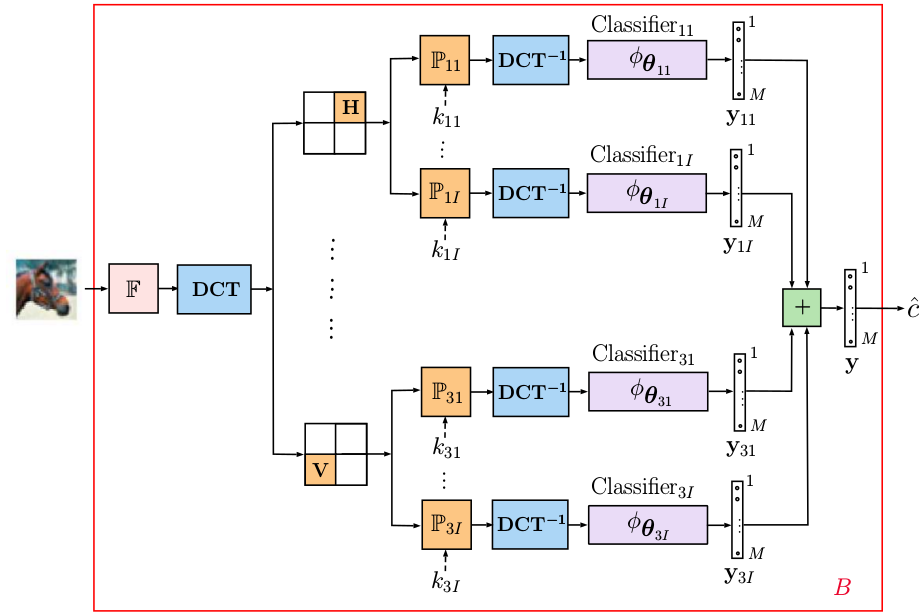

Fig. 1: Classification with the local DCT sign flipping.
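The idea behind Fig. 1 can be illustrated with a minimal NumPy sketch of key based sign flipping in a block-wise (local) DCT domain. The 8×8 block size, the use of the key as a random seed, the single sign pattern shared by all blocks, and the names `dct_matrix` and `key_sign_flip` are assumptions made for illustration; they are not taken from the authors' PyTorch code.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C, so that C @ C.T is the identity."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def key_sign_flip(image: np.ndarray, key: int, block: int = 8) -> np.ndarray:
    """Flip the signs of block-wise DCT coefficients with a key-seeded pattern.

    Assumption: the secret key is used as the seed of a pseudo-random
    generator that draws one +1/-1 pattern applied to every block.
    """
    h, w = image.shape
    if h % block or w % block:
        raise ValueError("image sides must be multiples of the block size")
    c = dct_matrix(block)
    signs = np.random.default_rng(key).choice([-1.0, 1.0], size=(block, block))

    # Split the image into non-overlapping blocks: (h/b, w/b, b, b).
    blocks = image.reshape(h // block, block, w // block, block)
    blocks = blocks.transpose(0, 2, 1, 3)

    coeffs = c @ blocks @ c.T        # 2D DCT of every block
    flipped = coeffs * signs         # key based sign flipping
    restored = c.T @ flipped @ c     # inverse 2D DCT of every block

    # Reassemble the blocks into an image of the original shape.
    return restored.transpose(0, 2, 1, 3).reshape(h, w)


image = np.random.default_rng(0).random((16, 16))
protected = key_sign_flip(image, key=1234)
recovered = key_sign_flip(protected, key=1234)
print(np.allclose(recovered, image))
```

Because the DCT matrix is orthonormal and the signs are ±1, the transform preserves the energy of the image and is undone by applying it again with the same key. In a multi-channel system, each channel would presumably use its own key, with a classifier trained on the correspondingly transformed data.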

Experimental results

The efficiency of the proposed multi-channel architecture, diversified and randomized by key based sign flipping in the DCT domain, was tested against adversarial attacks in two scenarios:

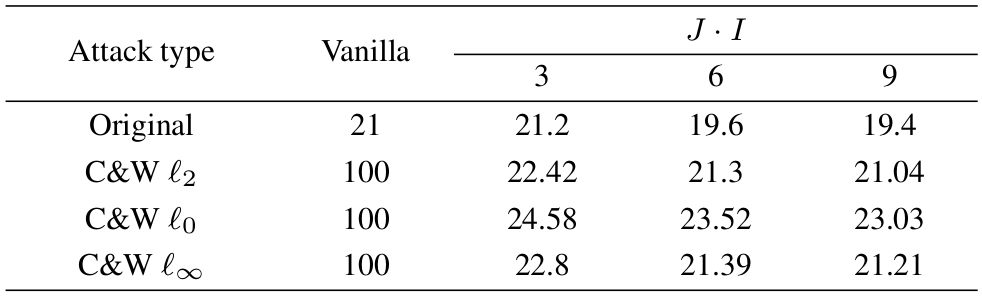

1. Gray-box gradient based attack. As a gradient based attack we use the attack proposed in [1], which is among the most efficient attacks against many proposed defense strategies. In the following it is referred to as C&W. In our experiments we use the C&W attacks based on the $\ell_2$, $\ell_0$ and $\ell_\infty$ norms. The obtained results are given in Table 1.

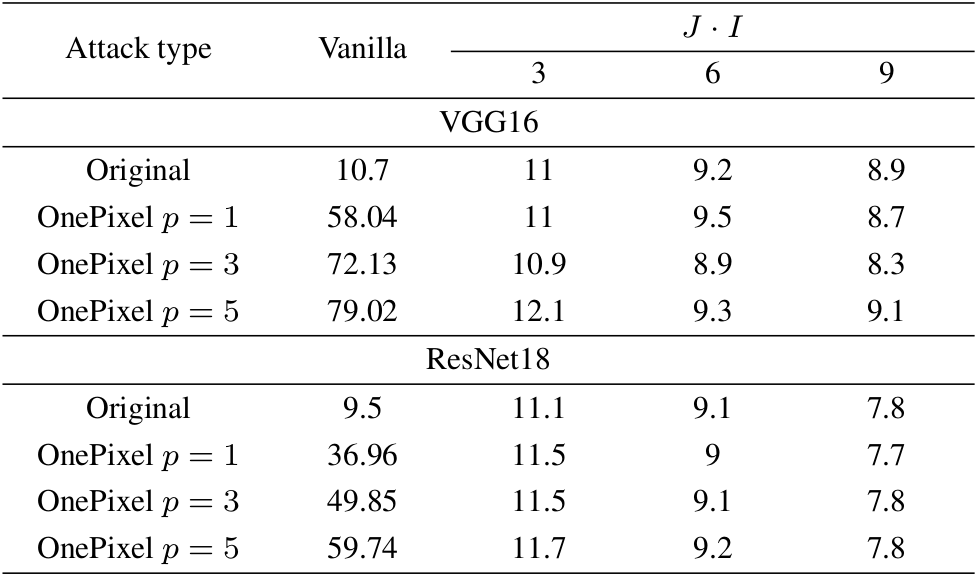

2. Black-box non-gradient based attack. As a non-gradient based attack we use the OnePixel attack proposed in [2], which uses the Differential Evolution (DE) optimisation algorithm [3] to generate the attack. The DE algorithm does not require the objective function to be differentiable or even known; instead, it only observes the output of the classifier, which is used as a black box. The OnePixel attack aims at perturbing a limited number of pixels in the input image. In our experiments, we use this attack to perturb 1, 3 and 5 pixels. The corresponding results are given in Table 2.
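To make the black-box setting of the second scenario concrete, the sketch below runs a small differential evolution search that modifies a limited number of pixels and only queries the classifier output. It is a generic DE/rand/1/bin loop on a toy problem, not the attack implementation of [2]; the classifier `toy_classifier`, the function `one_pixel_attack` and all hyperparameters (population size, iterations, `f`, `cr`) are hypothetical.

```python
import numpy as np


def toy_classifier(image: np.ndarray) -> np.ndarray:
    """Hypothetical two-class model: class 1 stands for 'bright image'."""
    logit = 5.0 * (image.sum() - 7.8)
    p1 = 1.0 / (1.0 + np.exp(-logit))
    return np.array([1.0 - p1, p1])


def one_pixel_attack(predict, image, true_label, n_pixels=1,
                     pop_size=40, iters=30, f=0.5, cr=0.7, seed=0):
    """Minimise the true-class probability by changing at most n_pixels pixels.

    Each candidate is a flat vector of (row, col, value) triplets. Only the
    outputs of predict() are used; no gradients are required.
    """
    rng = np.random.default_rng(seed)
    h, w = image.shape
    lo = np.tile([0.0, 0.0, 0.0], n_pixels)
    hi = np.tile([h - 1.0, w - 1.0, 1.0], n_pixels)

    def apply(candidate):
        adv = image.copy()
        for row, col, val in candidate.reshape(-1, 3):
            adv[int(round(row)), int(round(col))] = val
        return adv

    def fitness(candidate):
        return predict(apply(candidate))[true_label]

    pop = rng.uniform(lo, hi, size=(pop_size, lo.size))
    scores = np.array([fitness(p) for p in pop])

    for _ in range(iters):
        for i in range(pop_size):
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, size=3, replace=False)]
            mutant = np.clip(a + f * (b - c), lo, hi)    # mutation
            cross = rng.random(lo.size) < cr             # binomial crossover
            cross[rng.integers(lo.size)] = True          # keep >= 1 mutant gene
            trial = np.where(cross, mutant, pop[i])
            score = fitness(trial)
            if score <= scores[i]:                       # greedy selection
                pop[i], scores[i] = trial, score

    best = int(np.argmin(scores))
    return apply(pop[best]), float(scores[best])


clean = np.full((4, 4), 0.5)
adv, prob = one_pixel_attack(toy_classifier, clean, true_label=1)
print(toy_classifier(clean)[1], prob, int(np.count_nonzero(adv != clean)))
```

The search space grows with `n_pixels` (three coordinates per pixel), which is how the 1, 3 and 5 pixel variants would differ in this sketch.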

In both tables, the column "vanilla" corresponds to the accuracy of the original classifier without any defense. The row "original" corresponds to the use of non-attacked original data.
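The two scenarios bound the adversarial perturbation in different ways: the C&W variants are each tied to a norm of the perturbation, while the OnePixel attack limits the number of modified pixels. Assuming the norms in question are the $\ell_0$, $\ell_2$ and $\ell_\infty$ norms commonly used with C&W, a small helper such as the hypothetical `perturbation_norms` below could be used to check how large a given perturbation is under each of them.

```python
import numpy as np


def perturbation_norms(x, x_adv):
    """Return the l0, l2 and l-infinity norms of the perturbation x_adv - x.

    For a grayscale image, l0 counts modified pixels; for a colour image
    it counts modified channel entries.
    """
    delta = (np.asarray(x_adv, dtype=float) - np.asarray(x, dtype=float)).ravel()
    return {
        "l0": int(np.count_nonzero(delta)),    # number of changed entries
        "l2": float(np.linalg.norm(delta)),    # Euclidean length
        "linf": float(np.max(np.abs(delta))),  # largest single change
    }


x = np.zeros((2, 2))
x_adv = np.array([[0.3, 0.0], [0.0, -0.4]])
print(perturbation_norms(x, x_adv))
```

In this example two entries are changed, so a sparse attack restricted to one pixel could not have produced the perturbation, even though its largest single change is only 0.4.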

Conclusions

In this paper, we considered a defense mechanism against gradient and non-gradient based attacks in gray-box and black-box scenarios. The proposed mechanism is based on a multi-channel architecture with randomization and aggregation of the classification scores. It is remarkable that the architecture of the defense is not tailored to each class of attacks and is used in the same form against both. It is also interesting to note that the diversified classification with aggregation of the classifier outputs not only withstands the attacks but also improves the accuracy of the vanilla classifier. Finally, the proposed approach is compliant with the cryptographic principle that the defender has an information advantage over the attacker.

In future work, we plan to replace the summation currently used for aggregation with more complex learnable strategies.
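For reference, aggregation by summation of the soft outputs can be sketched as follows. This is a minimal NumPy illustration, not the authors' PyTorch code; the function names and the example logits are made up, and the per-channel outputs are assumed to be logits that are passed through a softmax before summation.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def aggregate_by_summation(channel_logits):
    """Sum the soft outputs of all channels and pick the best class.

    channel_logits: array of shape (n_channels, n_classes) for one input.
    Returns the predicted class index and the summed class scores.
    """
    soft = softmax(np.asarray(channel_logits, dtype=float))
    total = soft.sum(axis=0)
    return int(np.argmax(total)), total


# Made-up example: three channels, the third one is fooled into class 1.
logits = [[2.0, 0.5, 0.1],
          [1.8, 0.7, 0.0],
          [0.2, 2.5, 0.1]]
label, scores = aggregate_by_summation(logits)
print(label, scores)
```

In this example the two channels that still favour class 0 outweigh the fooled channel, so the aggregated decision is class 0. A learnable strategy would replace the plain `sum` with a trained combination of the per-channel outputs.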