Publications

Mathematical model of printing-imaging channel for blind detection of fake copy detection patterns

IEEE International Workshop on Information Forensics and Security (WIFS), 2022

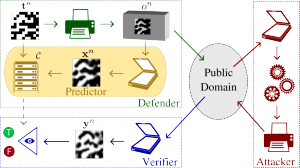

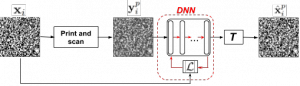

Nowadays, copy detection patterns (CDP) appear as a very promising anti-counterfeiting technology for physical object protection. However, the advent of deep learning as a powerful attacking tool has shown that general authentication schemes fail against such attacks. In this paper, we propose a new mathematical model of the printing-imaging channel for the authentication of CDP, together with a new detection scheme based on it. The results show that even copy fakes created by deep learning and unknown at the training stage can be reliably detected with the proposed approach, using only digital references of CDP during authentication.

@inproceedings { Tutt2022wifs,

author = { Tutt, Joakim and Taran, Olga and Chaban, Roman and Pulfer, Brian and Belousov, Yury and Holotyak, Taras and Voloshynovskiy, Slava },

booktitle = { IEEE International Workshop on Information Forensics and Security (WIFS) },

title = { Mathematical model of printing-imaging channel for blind detection of fake copy detection patterns },

address = { Shanghai, China },

pages = { },

month = { December },

year = { 2022 }

}

Printing variability of copy detection patterns

IEEE International Workshop on Information Forensics and Security (WIFS), 2022

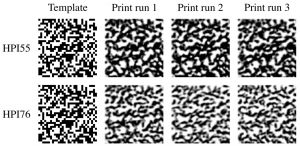

Copy detection patterns (CDP) are a novel solution for protecting products against counterfeiting that has gained popularity in recent years. CDP attract the anti-counterfeiting industry due to their numerous benefits in comparison to alternative protection techniques. Despite this attractiveness, there is an essential gap in the fundamental analysis of CDP authentication performance in large-scale industrial applications. It concerns the variability of CDP parameters under different production conditions, including the type of printer, substrate, printing resolution, etc. Since digital offset printing offers great flexibility in terms of product personalization in comparison with traditional offset printing, it is of particular interest to address the above concerns for digital offset printers, which are used by several companies for the CDP protection of physical objects. In this paper, we thoroughly investigate certain factors impacting CDP. The experimental results obtained during our study reveal some previously unknown effects and raise new and even more challenging questions. The results show that it is of great importance to carefully choose the substrate and printer for CDP production. This paper presents a new dataset produced by two industrial HP Indigo printers. The similarity between printed CDP and the digital templates from which they have been produced is chosen as a simple measure in our study. We found several particularities that might be of interest for large-scale industrial applications.
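The abstract above mentions a simple similarity measure between printed CDP and their digital templates without naming it here. As a minimal sketch, assuming Pearson correlation and a normalized Hamming distance as two plausible candidates (both chosen for illustration, not taken from the paper), and a toy additive-noise channel in place of a real printer and scanner:

```python
import numpy as np

def pearson_correlation(template: np.ndarray, printed: np.ndarray) -> float:
    """Pearson correlation between a digital template and a printed-and-scanned CDP."""
    t = template.astype(np.float64).ravel()
    p = printed.astype(np.float64).ravel()
    t = t - t.mean()
    p = p - p.mean()
    denom = np.linalg.norm(t) * np.linalg.norm(p)
    if denom == 0.0:
        raise ValueError("correlation is undefined for a constant image")
    return float(t @ p / denom)

def normalized_hamming(template: np.ndarray, printed: np.ndarray,
                       threshold: float = 0.5) -> float:
    """Fraction of symbols that differ after binarizing the printed image."""
    binary = (printed >= threshold).astype(np.uint8)
    return float(np.mean(binary != template.astype(np.uint8)))

# Toy data: a random binary template and a hypothetical noisy "print" of it.
rng = np.random.default_rng(0)
template = rng.integers(0, 2, size=(64, 64))
printed = np.clip(template + rng.normal(0.0, 0.3, size=template.shape), 0.0, 1.0)

print("correlation:", pearson_correlation(template, printed))
print("hamming:", normalized_hamming(template, printed))
```

Comparing such scores across printers and substrates is one way the variability described above could be quantified; the threshold of 0.5 and the noise level are arbitrary illustration values.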

@inproceedings { Chaban2022wifs,

author = { Chaban, Roman and Taran, Olga and Tutt, Joakim and Belousov, Yury and Pulfer, Brian and Holotyak, Taras and Voloshynovskiy, Slava},

booktitle = { IEEE International Workshop on Information Forensics and Security (WIFS) },

title = { Printing variability of copy detection patterns },

address = { Shanghai, China },

pages = { },

month = { December },

year = { 2022 }

}

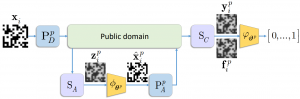

Digital twins of physical printing-imaging channel

IEEE International Workshop on Information Forensics and Security (WIFS), 2022

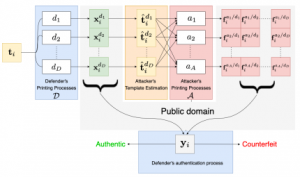

In this paper, we address the problem of modeling a printing-imaging channel built on a machine learning approach a.k.a. digital twin for anti-counterfeiting applications based on copy detection patterns (CDP). The digital twin is formulated on an information-theoretic framework called Turbo that uses variational approximations of mutual information developed for both encoder and decoder in two-directional information passage. The proposed model generalizes several state-of-the-art architectures such as adversarial autoencoder (AAE), CycleGAN and adversarial latent space auto-encoder (ALAE). This model can be applied to any type of printing and imaging and it only requires training data consisting of digital templates or artworks that are sent to a printing device and data acquired by an imaging device. Moreover, these data can be paired, unpaired or hybrid paired-unpaired which makes the proposed architecture very flexible and scalable to many practical setups. We demonstrate the impact of various architectural factors, metrics and discriminators on the overall system performance in the task of generation/prediction of printed CDP from their digital counterparts and vice versa. We also compare the proposed system with several state-of-the-art methods used for image-to-image translation applications.

@inproceedings { Belousov2022wifs,

author = { Belousov, Yury and Pulfer, Brian and Chaban, Roman and Tutt, Joakim and Taran, Olga and Holotyak, Taras and Voloshynovskiy, Slava},

booktitle = { IEEE International Workshop on Information Forensics and Security (WIFS) },

title = { Digital twins of physical printing-imaging channel},

url={http://sip.unige.ch/articles/2022/Belousov_WIFS2022.pdf},

address = { Shanghai, China },

pages = { },

month = { December },

year = { 2022 }

}

Anomaly localization for copy detection patterns through print estimation

IEEE International Workshop on Information Forensics and Security (WIFS), 2022

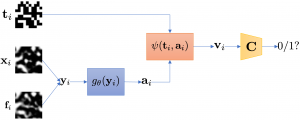

Copy detection patterns (CDP) are a recent technology for protecting products from counterfeiting. However, in contrast to traditional copy fakes, deep learning-based fakes have been shown to be hardly distinguishable from originals by traditional authentication systems. Systems based on classical supervised learning and digital templates assume knowledge of fake CDP at training time and cannot generalize to unseen types of fakes. Authentication based on printed copies of originals is an alternative that yields better results even for unseen fakes and simple authentication metrics, but comes at the impractical cost of acquiring and storing printed copies. In this work, to overcome these shortcomings, we design a machine learning (ML) based authentication system that only requires digital templates and printed original CDP for training, whereas authentication is based solely on digital templates, which are used to estimate original printed codes. The obtained results show that the proposed system can efficiently authenticate original CDP and detect fake CDP by accurately locating the anomalies in the fakes. The empirical evaluation of the authentication system under investigation is performed on original and ML-based fake CDP printed on two industrial printers.
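The idea in the abstract above (estimate the printed code from the digital template, then locate where a probe deviates from that estimate) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `box_blur` is a hypothetical stand-in for the learned print estimator, and the thresholds are arbitrary.

```python
import numpy as np

def box_blur(image: np.ndarray, radius: int = 1) -> np.ndarray:
    """Hypothetical stand-in for a learned print estimator: a mean filter."""
    padded = np.pad(image.astype(np.float64), radius, mode="edge")
    size = 2 * radius + 1
    out = np.zeros(image.shape, dtype=np.float64)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out / (size * size)

def anomaly_map(template: np.ndarray, probe: np.ndarray) -> np.ndarray:
    """Absolute difference between the probe and the print estimated from the template."""
    return np.abs(probe.astype(np.float64) - box_blur(template))

def authenticate(template, probe, pixel_threshold=0.2, max_anomalous_fraction=0.01):
    """Return (is_authentic, anomaly_mask) using only the digital template."""
    mask = anomaly_map(template, probe) > pixel_threshold
    return bool(mask.mean() <= max_anomalous_fraction), mask

rng = np.random.default_rng(0)
template = rng.integers(0, 2, size=(32, 32))

# Toy "original": the estimated print plus small acquisition noise.
original = box_blur(template) + rng.normal(0.0, 0.02, size=template.shape)

# Toy "fake": the same image with a tampered 16 x 16 region.
fake = original.copy()
fake[8:24, 8:24] = 0.0

is_original, _ = authenticate(template, original)
is_fake_accepted, fake_mask = authenticate(template, fake)
print(is_original, is_fake_accepted, int(fake_mask.sum()))
```

The anomaly mask marks where the probe disagrees with the estimate, which is what makes the decision localizable rather than a single global score.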

@inproceedings { Pulfer2022wifs,

author = { Pulfer, Brian and Belousov, Yury and Tutt, Joakim and Chaban, Roman and Taran, Olga and Holotyak, Taras and Voloshynovskiy, Slava},

booktitle = { IEEE International Workshop on Information Forensics and Security (WIFS) },

title = { Anomaly localization for copy detection patterns through print estimation },

url={http://sip.unige.ch/articles/2022/Pulfer_WIFS2022.pdf},

address = { Shanghai, China },

pages = { },

month = { December },

year = { 2022 }

}

Authentication of copy detection patterns under machine learning attacks: a supervised approach

IEEE International Conference on Image Processing (ICIP), 2022

Copy detection patterns (CDP) are an attractive technology that allows manufacturers to defend their products against counterfeiting. The main assumption behind the protection mechanism of CDP is that these codes, printed with the smallest symbol size (1x1) on an industrial printer, cannot be copied or cloned with sufficient accuracy due to the data processing inequality. However, previous works have shown that Machine Learning (ML) based attacks can produce high-quality fakes, resulting in decreased accuracy of traditional feature-based authentication systems. While Deep Learning (DL) can be used as a part of the authentication system, to the best of our knowledge, none of the previous works has studied the performance of a DL-based authentication system against ML-based attacks on CDP with 1x1 symbol size. In this work, we study this performance assuming a supervised learning (SL) setting.

@inproceedings { pulfer2022icip,

author = { Pulfer, Brian and Chaban, Roman and Belousov, Yury and Tutt, Joakim and Taran, Olga and Holotyak, Taras and Voloshynovskiy, Slava },

booktitle = { IEEE International Conference on Image Processing (ICIP) },

title = { Authentication of Copy Detection Patterns under Machine Learning Attacks: A Supervised Approach },

address = { Bordeaux, France },

month = { October },

year = { 2022 }

}

Machine learning attack on copy detection patterns: are 1x1 patterns cloneable?

IEEE International Workshop on Information Forensics and Security (WIFS), 2021

Nowadays, the modern economy critically requires reliable yet cheap protection solutions against product counterfeiting for the mass market. Copy detection patterns (CDP) are considered as such a solution in several applications. It is assumed that, being printed at the maximum achievable resolution of an industrial printer with the smallest symbol size 1 × 1, CDP cannot be copied with sufficient accuracy and thus are unclonable. In this paper, we challenge this hypothesis and consider a copy attack against CDP based on machine learning. The experimental results based on samples produced on two industrial printers demonstrate that simple detection metrics used in CDP authentication cannot reliably distinguish original CDP from their fakes under certain printing conditions. Thus, the paper calls for a careful reconsideration of CDP cloneability and a search for new authentication techniques and CDP optimization in the face of this attack.

@inproceedings { Chaban2021wifs,

author = { Chaban, Roman and Taran, Olga and Tutt, Joakim and Holotyak, Taras and Bonev, Slavi and Voloshynovskiy, Slava },

booktitle = { IEEE International Workshop on Information Forensics and Security (WIFS) },

title = { Machine learning attack on copy detection patterns: are 1x1 patterns cloneable? },

address = { Montpellier, France },

pages = { },

month = { December },

year = { 2021 }

}

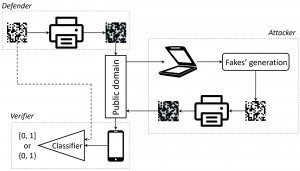

Mobile authentication of copy detection patterns: how critical is to know fakes?

IEEE International Workshop on Information Forensics and Security (WIFS), 2021

Protection of physical objects against counterfeiting is an important task for modern economies. In recent years, high-quality counterfeits have come closer to originals thanks to the rapid advancement of digital technologies. To combat these counterfeits, an anti-counterfeiting technology based on hand-crafted randomness implemented in the form of copy detection patterns (CDP) has been proposed, enabling a link between the physical and digital worlds and being used in various brand protection applications. Modern mobile phone technologies make the verification of CDP easier and available to end customers. Despite considerable interest, CDP authentication based on mobile phone imaging remains insufficiently studied. In this paper, we investigate CDP authentication under real-life conditions, with the codes printed on an industrial printer and enrolled via a modern mobile phone under regular light conditions. The authentication aspects of the obtained CDP are investigated with respect to four types of copy fakes. The impact of the type of fakes used for training the authentication classifier is studied in two scenarios: (i) supervised binary classification under various assumptions about the fakes and (ii) one-class classification under unknown fakes. The obtained results show that modern machine learning approaches and the technical capacity of modern mobile phones make CDP authentication under unknown fakes feasible with respect to the considered types of fakes and code design.

@inproceedings { Taran2021IndigoMobile,

author = { Taran, Olga and Tutt, Joakim and Holotyak, Taras and Chaban, Roman and Bonev, Slavi and Voloshynovskiy, Slava },

booktitle = { IEEE International Workshop on Information Forensics and Security (WIFS) },

title = { Mobile authentication of copy detection patterns: how critical is to know fakes? },

address = { Montpellier, France },

pages = { },

month = { December },

year = { 2021 }

}

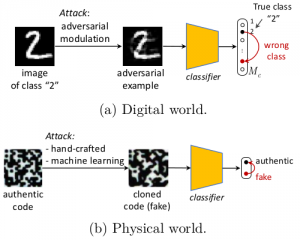

Reliable classification in digital and physical worlds under active adversaries and prior ambiguity

PhD thesis, Department of Computer Science, Faculty of Sciences, University of Geneva

Counterfeiting and piracy are among the main problems for modern society. Many traditional anti-counterfeiting technologies quickly become obsolete in view of the rapid technological progress that offers counterfeiters a wide range of modern high-tech tools and applications. At the same time, many new approaches to anti-counterfeiting, such as printable graphical codes, appear thanks to the advancement of modern mobile technologies and machine learning algorithms. The security of printable codes in terms of their reproducibility by unauthorized parties remains largely unexplored. This thesis addresses the problem of anti-counterfeiting of physical objects and investigates the authentication aspects and the resistance to illegal copying of modern printable graphical codes from a machine learning perspective. Special attention is paid to reliable authentication on modern mobile phones. The robustness to adversarial examples in the digital world and training with a limited amount of labeled data are also investigated.

@phdthesis{taran2021reliable,

title={Reliable classification in digital and physical worlds under active adversaries and prior ambiguity},

author={Taran, Olga},

year={2021},

school={University of Geneva}

}

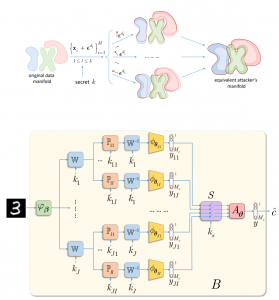

Machine learning through cryptographic glasses: combating adversarial attacks by key based diversified aggregation

EURASIP Journal on Information Security, 10 (2020)

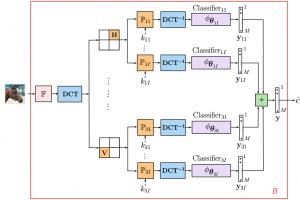

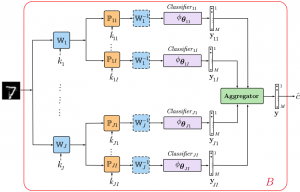

In recent years, classification techniques based on deep neural networks (DNN) have been widely used in many fields such as computer vision, natural language processing, self-driving cars, etc. However, the vulnerability of DNN-based classification systems to adversarial attacks questions their usage in many critical applications. Therefore, the development of robust DNN-based classifiers is a critical point for the future deployment of these methods. A no less important issue is understanding the mechanisms behind this vulnerability. Additionally, it is not completely clear how to link machine learning with cryptography to create an information advantage of the defender over the attacker. In this paper, we propose a Key based Diversified Aggregation (KDA) mechanism as a defense strategy in gray-box and black-box scenarios. KDA assumes that the attacker (i) knows the architecture of the classifier and the defense strategy used, (ii) has access to the training data set but (iii) does not know the secret key and does not have access to the internal states of the system. The robustness of the system is achieved by a specially designed key based randomization. The proposed randomization prevents the gradients' back propagation and prevents the attacker from creating a "bypass" system. The randomization is performed simultaneously in several channels. Each channel introduces its own randomization in a special transform domain. The sharing of a secret key between the training and test stages creates an information advantage for the defender. Finally, the aggregation of soft outputs from each channel stabilizes the results and increases the reliability of the final score. The experimental evaluation demonstrates a high robustness and universality of the KDA against state-of-the-art gradient based gray-box transferability attacks and non-gradient based black-box attacks.

@article {Taran2020eurasip,

author = {Taran, Olga and Rezaeifar, Shideh and Holotyak, Taras and Voloshynovskiy, Slava},

journal = {EURASIP Journal on Information Security},

title = {Machine learning through cryptographic glasses: combating adversarial attacks by key based diversified aggregation},

url={https://jis-eurasipjournals.springeropen.com/articles/10.1186/s13635-020-00106-x},

month = { January },

year = { 2020 }

}

Adversarial detection of counterfeited printable graphical codes: towards "adversarial games" in physical world

IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020

This paper addresses the problem of anti-counterfeiting of physical objects and investigates the possibility of detecting counterfeited printable graphical codes from a machine learning perspective. We investigate fake generation via two different deep regeneration models and study the authentication capacity of several discriminators on a data set of real printed graphical codes where different printing and scanning qualities are taken into account. The obtained experimental results provide new insight into scenarios where printable graphical codes can be accurately cloned and cannot be distinguished from originals.

@inproceedings { Taran2020icassp,

author = { Taran, Olga and Bonev, Slavi and Holotyak, Taras and Voloshynovskiy, Slava },

booktitle = { IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) },

title = { Adversarial detection of counterfeited printable graphical codes: towards "adversarial games" in physical world },

year = { 2020 }

}

Information bottleneck through variational glasses

NeurIPS Workshop on Bayesian Deep Learning, 2019

The information bottleneck (IB) principle [1] has become an important element in the information-theoretic analysis of deep models. Many state-of-the-art generative models of both the Variational Autoencoder (VAE) [2; 3] and Generative Adversarial Network (GAN) [4] families use various bounds on mutual information terms to introduce certain regularization constraints [5; 6; 7; 8; 9; 10]. Accordingly, the main difference between these models lies in the added regularization constraints and targeted objectives. In this work, we consider the IB framework for three classes of models that include supervised, unsupervised and adversarial generative models. We apply a variational decomposition leading to a common structure, which makes it easy to establish connections between these models and analyze the underlying assumptions. Based on these results, we focus our analysis on the unsupervised setup and reconsider the VAE family. In particular, we present a new interpretation of the VAE family based on the IB framework using a direct decomposition of mutual information terms and show some interesting connections to existing methods such as VAE [2; 3], β-VAE [11], AAE [12], InfoVAE [5] and VAE/GAN [13]. Instead of adding regularization constraints to an evidence lower bound (ELBO) [2; 3], which itself is a lower bound, we show that many known methods can be considered as a product of the variational decomposition of mutual information terms in the IB framework. The proposed decomposition might also contribute to the interpretability of generative models of both the VAE and GAN families and provide new insights into generative compression [14; 15; 16; 17]. It can also be of interest for the analysis of novelty detection based on one-class classifiers [18] with IB based discriminators.
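For orientation, the classical IB objective of [1] and one standard variational bound can be written as below. This is the textbook formulation, given here as background under assumed notation (input $X$, target $Y$, latent code $Z$, encoder $q_\phi(z|x)$, prior $p(z)$, trade-off $\beta$); it is not the specific decomposition derived in the paper.

$$
\min_{q_\phi(z|x)} \; \mathcal{L}_{\mathrm{IB}} = I(X;Z) - \beta\, I(Z;Y), \qquad \beta > 0 .
$$

The compression term admits a variational upper bound for any prior $p(z)$, because the gap equals a non-negative KL divergence between the aggregated posterior $q_\phi(z) = \mathbb{E}_{p(x)}[q_\phi(z|x)]$ and the prior:

$$
\mathbb{E}_{p(x)}\!\left[ D_{\mathrm{KL}}\!\left( q_\phi(z|x) \,\|\, p(z) \right) \right]
= I(X;Z) + D_{\mathrm{KL}}\!\left( q_\phi(z) \,\|\, p(z) \right)
\;\ge\; I(X;Z) .
$$

The left-hand side is the familiar KL regularizer of the VAE objective, which is one way to see why VAE-type models can be read through the IB lens.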

@inproceedings { Voloshynovskiy2019NeurIPS,

author = { Voloshynovskiy, Slava and Kondah, Mouad and Rezaeifar, Shideh and Taran, Olga and Holotyak, Taras and Rezende, Danilo Jimenez},

booktitle = { NeurIPS Workshop on Bayesian Deep Learning },

title = { Information bottleneck through variational glasses },

address = { Vancouver, Canada },

url={http://sip.unige.ch/articles/2019/NeurIPS_BDL_2019.pdf},

pages = { },

month = { December },

year = { 2019 }

}

Robustification of deep net classifiers by key based diversified aggregation with pre-filtering

IEEE International Conference on Image Processing (ICIP), 2019

In this paper, we address the vulnerability of machine learning systems to adversarial attacks. We propose and investigate a Key based Diversified Aggregation (KDA) mechanism as a defense strategy. The KDA assumes that the attacker (i) knows the architecture of the classifier and the defense strategy used, (ii) has access to the training data set but (iii) does not know the secret key. The robustness of the system is achieved by a specially designed key based randomization. The proposed randomization prevents the gradients' back propagation and the creation of a "bypass" system. The randomization is performed simultaneously in several channels, and a multi-channel aggregation stabilizes the results of randomization by aggregating soft outputs from each classifier in the multi-channel system. The experimental evaluation demonstrates a high robustness and universality of the KDA against the most efficient gradient based attacks, like those proposed by N. Carlini and D. Wagner, and non-gradient based sparse adversarial perturbations like OnePixel attacks.

@inproceedings { Taran2019icip,

author = { Taran, Olga and Rezaeifar, Shideh and Holotyak, Taras and Voloshynovskiy, Slava},

booktitle = { IEEE International Conference on Image Processing (ICIP) },

title = { Robustification of deep net classifiers by key based diversified aggregation with pre-filtering },

url={http://sip.unige.ch/articles/2019/ICIP2019_Taran.pdf},

address = { Taipei, Taiwan },

month = { September },

pages = { },

year = { 2019 }

}

Defending against adversarial attacks by randomized diversification

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019

The vulnerability of machine learning systems to adversarial attacks questions their usage in many applications. In this paper, we propose randomized diversification as a defense strategy. We introduce a multi-channel architecture in a gray-box scenario, which assumes that the architecture of the classifier and the training data set are known to the attacker. The attacker lacks access only to the secret key and to the internal states of the system at test time. The defender processes an input in multiple channels. Each channel introduces its own randomization in a special transform domain based on a secret key shared between the training and testing stages. Such a transform based randomization with a shared key preserves the gradients in key-defined sub-spaces for the defender, but it prevents gradient back propagation and the creation of various bypass systems for the attacker. An additional benefit of multi-channel randomization is the aggregation that fuses soft outputs from all channels, thus increasing the reliability of the final score. The sharing of a secret key creates an information advantage for the defender. Experimental evaluation demonstrates an increased robustness of the proposed method to a number of known state-of-the-art attacks.
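The multi-channel scheme described above can be sketched as follows. This is a toy illustration, not the paper's implementation: the choice of the DCT as the transform, sign flipping as the randomization, and untrained linear maps as per-channel classifiers are all assumptions made for the example.

```python
import numpy as np

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    mat = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    mat[0, :] /= np.sqrt(2.0)
    return mat

def key_randomize(image: np.ndarray, key: int, channel: int) -> np.ndarray:
    """Flip signs of 2D DCT coefficients with a pattern derived from (key, channel)."""
    rows, cols = dct_matrix(image.shape[0]), dct_matrix(image.shape[1])
    coeffs = rows @ image @ cols.T
    rng = np.random.default_rng([key, channel])  # secret key seeds the pattern
    signs = rng.choice([-1.0, 1.0], size=coeffs.shape)
    return rows.T @ (coeffs * signs) @ cols

def softmax(logits: np.ndarray) -> np.ndarray:
    e = np.exp(logits - logits.max())
    return e / e.sum()

def aggregate(image: np.ndarray, key: int, classifiers) -> np.ndarray:
    """Average the soft outputs of all channels into one final score vector."""
    outputs = [softmax(clf(key_randomize(image, key, ch)))
               for ch, clf in enumerate(classifiers)]
    return np.mean(outputs, axis=0)

# Hypothetical, untrained per-channel classifiers (random linear maps, 3 classes).
rng = np.random.default_rng(0)
image = rng.random((8, 8))
weights = [rng.normal(size=(3, 64)) for _ in range(4)]
classifiers = [lambda x, w=w: w @ x.ravel() for w in weights]

scores = aggregate(image, key=1234, classifiers=classifiers)
print(scores)
```

In a real system each channel's classifier would be trained on inputs randomized with the same key, so that only a party holding the key can reproduce the channel inputs.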

@inproceedings { Taran2019cvpr,

author = { Taran, Olga and Rezaeifar, Shideh and Holotyak, Taras and Voloshynovskiy, Slava },

booktitle = { The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) },

title = { Defending against adversarial attacks by randomized diversification },

address = { Long Beach, USA },

month = { June },

pages = { },

year = { 2019 }

}

Clonability of anti-counterfeiting printable graphical codes: a machine learning approach

IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2019

In recent years, printable graphical codes have attracted a lot of attention enabling a link between the physical and digital worlds, which is of great interest for the IoT and brand protection applications. The security of printable codes in terms of their reproducibility by unauthorized parties or clonability is largely unexplored. In this paper, we try to investigate the clonability of printable graphical codes from a machine learning perspective. The proposed framework is based on a simple system composed of fully connected neural network layers. The results obtained on real codes printed by several printers demonstrate a possibility to accurately estimate digital codes from their printed counterparts in certain cases. This provides a new insight on scenarios, where printable graphical codes can be accurately cloned.

@inproceedings { Taran2019icassp,

author = { Taran, Olga and Bonev, Slavi and Voloshynovskiy, Slava },

booktitle = { IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) },

title = { Clonability of anti-counterfeiting printable graphical codes: a machine learning approach },

address = { Brighton, United Kingdom },

month = { May },

pages = { },

year = { 2019 }

}

Side Effects of AI Revolution

Slava Voloshynovskiy gave a talk at the "AI and the Law" conference, GENEVA – HARVARD – RENMIN – SYDNEY LAW FACULTY CONFERENCE, January 14, 2019.